There’s perhaps some sort of cruel irony in WPP’s news that its network had been hit by malware, bringing down some of its computer systems.

WPP has, of course, been at the forefront of demanding that tech platforms do more to police the content they provide, although it too has now been caught out by the vagaries of the web. Never mind brand safety: its own online presence was compromised.

While its technicians worked quickly to resolve and patch the problem, the issue for brands is a more enduring one. Rarely a month goes by without reports of brands that have unwittingly passed advertising revenue to Islamist and other extremist groups. The most recently reported incident was particularly embarrassing, as some of the advertisers were political parties.

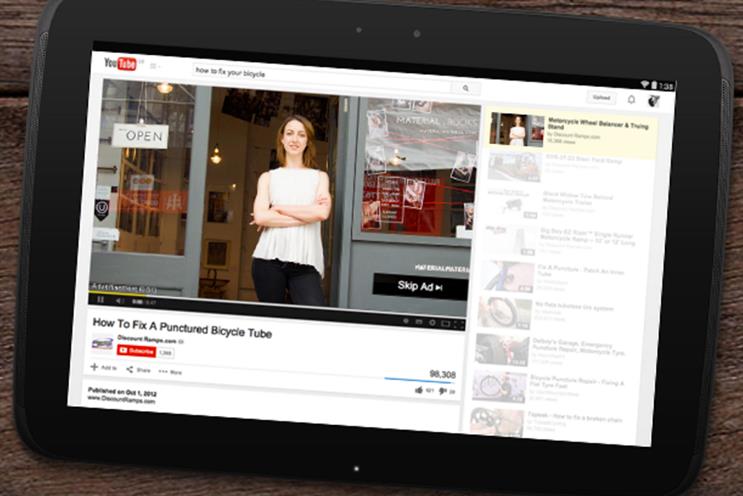

The brands didn’t know, obviously, but the chain of events is well established: extremist material is uploaded to YouTube quickly, before anyone is able to take it down. It is then tagged for monetisation. Google recently changed the rules on which channels can be monetised following a previous Times investigation, but new channels can still earn ad revenue once they pass 10,000 views.

As we’ve seen, a blame game then follows. YouTube has so far been unable to remove extremist content at the necessary lightning speed, and has yet to introduce filtering technology strong enough to exclude extreme content. Media agencies are also partly culpable because of the way media is traded: the need for speed and greed has fuelled programmatic buying. There is a brief period of uproar from all sides, and then it all goes quiet until the next scandal flares up in a few months’ time.

To break this cycle, we must welcome innovations and clever new mechanics built on smart targeting, savvy platform development, and brilliantly appropriate content that audiences actually want.

In fairness to Google, it is searching for solutions, but finding a catch-all solution will be incredibly tough. It therefore cannot be solely the responsibility of the platform, just as WhatsApp cannot take all the blame for terrorists using its platform to communicate.

If a platform’s core purpose rests on the fundamental freedom of users to upload whatever content they choose, it is currently impossible to ‘scan’ that content at the point of upload and determine what might be considered extremist propaganda. Aside from the issues of subjectivity and nuance, the technology just isn’t there yet.

That’s why we need thinking that breaks the blame cycle, something we’ve tried to get to grips with at Copa90. One potential solution is to create branded content streams, such as our own FC90 on Facebook, which provides authenticated, authorised content to an audience that is interested in viewing it. In this way, brands are guaranteed to appear in an environment that is both highly targeted and completely suited to them.

This model has far-reaching consequences for the industry, placing the onus on publishers and platforms to develop and evolve social advertising products for mutual benefit, and giving brands a better option than the reflexive blanket media buys of the last few years. Brands want, in fact demand, this. But for it to happen, media agencies need to be prepared to break with their established buying processes.

If we are in a new advertising era where content is crucial to reaching audiences that are proving resistant to advertising messages, new bespoke platforms represent a way forward. They allow brands to hand-pick how they engage with their audience, offering a much deeper relationship in a safe and trusted environment. And, from an industry perspective, they also help break the cyclical problems we currently face, which no one, whether brand, media owner or agency, can afford to ignore.

James Kirkham is head of Copa90